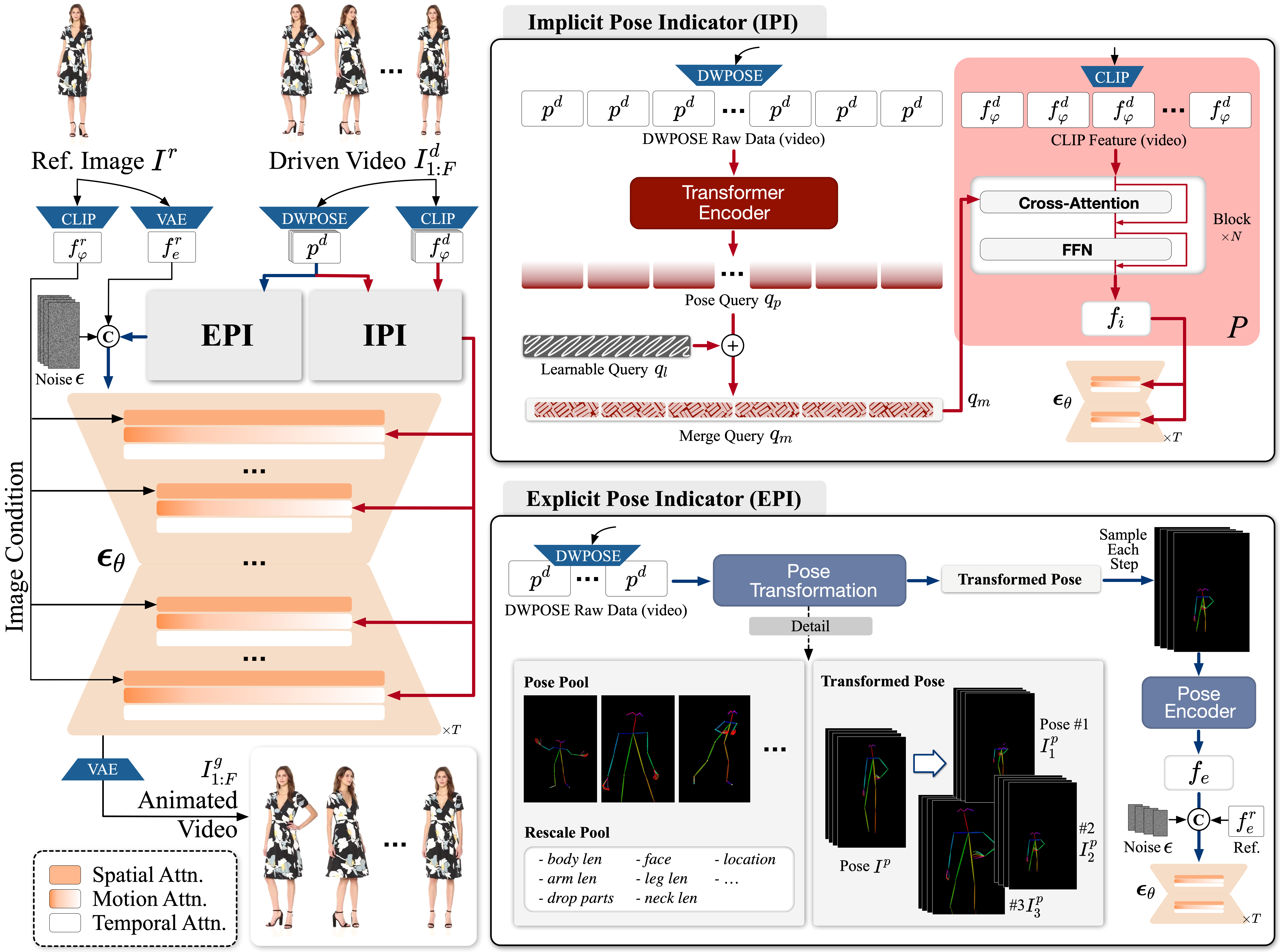

Character image animation, which generates high-quality videos from a reference image and a target pose sequence, has seen significant progress in recent years. However, most existing methods apply only to human figures and usually do not generalize well to anthropomorphic characters commonly used in industries such as gaming and entertainment. Our in-depth analysis attributes this limitation to insufficient motion modeling: these methods cannot comprehend the movement pattern of the driving video and therefore impose the pose sequence rigidly onto the target character. To this end, this paper proposes \(\texttt{Animate-X}\), a universal animation framework based on the latent diffusion model (LDM) for various character types (collectively named \(\texttt{X}\)), including anthropomorphic characters. To enhance motion representation, we introduce the Pose Indicator, which captures comprehensive motion patterns from the driving video in both an implicit and an explicit manner. The former leverages CLIP visual features of the driving video to extract the gist of its motion, such as the overall movement pattern and the temporal relations among motions, while the latter strengthens the generalization of the LDM by simulating, in advance, inputs that may arise during inference. Moreover, we introduce a new Animated Anthropomorphic Benchmark (\(\texttt{$A^2$Bench}\)) to evaluate the performance of \(\texttt{Animate-X}\) on universal and widely applicable animation images. Extensive experiments demonstrate the superiority and effectiveness of \(\texttt{Animate-X}\) compared to state-of-the-art methods.
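As a rough illustration of what "simulating possible inputs in advance" could look like, the sketch below rescales a 2D driving pose about its centroid to produce body proportions that differ from the original. This is only an assumption-laden toy, not the Animate-X implementation: the function names `rescale_pose` and `simulate_pose_variants`, the keypoint format, and the scale range are all hypothetical and chosen for illustration.

```python
import random
from typing import List, Tuple

Keypoint = Tuple[float, float]  # hypothetical format: (x, y) in pixel coordinates


def rescale_pose(keypoints: List[Keypoint], sx: float, sy: float) -> List[Keypoint]:
    """Scale a pose about its centroid by (sx, sy), keeping the centroid fixed."""
    cx = sum(x for x, _ in keypoints) / len(keypoints)
    cy = sum(y for _, y in keypoints) / len(keypoints)
    return [(cx + (x - cx) * sx, cy + (y - cy) * sy) for x, y in keypoints]


def simulate_pose_variants(
    keypoints: List[Keypoint],
    n_variants: int,
    scale_range: Tuple[float, float] = (0.6, 1.4),  # assumed range, not from the paper
    seed: int = 0,
) -> List[List[Keypoint]]:
    """Generate randomly rescaled copies of a pose to mimic mismatched body shapes."""
    rng = random.Random(seed)
    lo, hi = scale_range
    return [
        rescale_pose(keypoints, rng.uniform(lo, hi), rng.uniform(lo, hi))
        for _ in range(n_variants)
    ]


if __name__ == "__main__":
    # A toy three-point "pose": head, left foot, right foot.
    pose = [(50.0, 10.0), (40.0, 90.0), (60.0, 90.0)]
    for variant in simulate_pose_variants(pose, n_variants=3):
        print(variant)
```

Training on such perturbed poses alongside the original is one plausible way to expose a model to the mismatch between a human driving pose and a non-human reference character; the transformations actually used by the Pose Indicator may differ.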

@inproceedings{AnimateX2025,
  title={Animate-X: Universal Character Image Animation with Enhanced Motion Representation},
  author={Tan, Shuai and Gong, Biao and Wang, Xiang and Zhang, Shiwei and Zheng, Dandan and Zheng, Ruobin and Zheng, Kecheng and Chen, Jingdong and Yang, Ming},
  booktitle={International Conference on Learning Representations},
  year={2025}
}

@article{AnimateX++2025,
  title={Animate-X++: Universal Character Image Animation with Dynamic Backgrounds},
  author={Tan, Shuai and Gong, Biao and Liu, Zhuoxin and Wang, Yan and Feng, Yifan and Zhao, Hengshuang},
  journal={arXiv preprint arXiv:2508.09545},
  year={2025}
}

@article{tan2025SynMotion,
  title={SynMotion: Semantic-Visual Adaptation for Motion Customized Video Generation},
  author={Tan, Shuai and Gong, Biao and Wei, Yujie and Zhang, Shiwei and Liu, Zhuoxin and Zheng, Dandan and Chen, Jingdong and Wang, Yan and Ouyang, Hao and Zheng, Kecheng and Shen, Yujun},
  journal={arXiv preprint arXiv:2506.23690},
  year={2025}
}

@inproceedings{Mimir2025,
  title={Mimir: Improving Video Diffusion Models for Precise Text Understanding},
  author={Tan, Shuai and Gong, Biao and Feng, Yutong and Zheng, Kecheng and Zheng, Dandan and Shi, Shuwei and Shen, Yujun and Chen, Jingdong and Yang, Ming},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  year={2025}
}

@article{wang2024Unianimate,
  title={Unianimate: Taming Unified Video Diffusion Models for Consistent Human Image Animation},
  author={Wang, Xiang and Zhang, Shiwei and Gao, Changxin and Wang, Jiayu and Zhou, Xiaoqiang and Zhang, Yingya and Yan, Luxin and Sang, Nong},
  journal={arXiv preprint arXiv:2406.01188},
  year={2024}
}

@article{CoDance2025,
  title={CoDance: An Unbind-Rebind Paradigm for Robust Multi-Subject Animation},
  author={Tan, Shuai and Gong, Biao and Ma, Ke and Feng, Yutong and Zhang, Qiyuan and Wang, Yan and Shen, Yujun and Zhao, Hengshuang},
  journal={arXiv preprint arXiv:2601.11096},
  year={2025}
}